Chris is the new columnist for Automated Trader Magazine.

C'mon Chris .... when are you going to get that venture funding!

©2006 Marc Adler - All Rights Reserved

Wednesday, December 27, 2006

Tuesday, December 26, 2006

Penn-Lehman Automated Trading Project

Here

Plus, an internship at the Prop Trading group at Lehman.

Another reason to make sure that your kids study hard for the SATs.

©2006 Marc Adler - All Rights Reserved

News Mining Agent for Automated Stock Trading

Here is a thesis on semantic recognition of financial news items.

... as we slowly move towards phasing out traders (as per IBM's prediction).

©2006 Marc Adler - All Rights Reserved

Coding for Humanity

At this time of year, one's thoughts sometimes turn to the larger things in life. You might ask yourself what your legacy is going to be. Do your coding and architecture skills somehow contribute to the greater good of the world, and will humanity benefit from your efforts?

I never put this on my resume, but I consulted part-time for 4 1/2 years for a company called Classroom Inc. From January 1997 to October 2001, I would devote part of my time to writing "simulations" for CRI, and probably over one million school children have used my programs.

Classroom Inc (CRI) was originally formed as a non-profit partnership between IBM, JP Morgan, and Bear Stearns. Lewis Bernard, who was very high up at JPMC, was the CEO of Classroom Inc. The mission was to provide educational computer software to inner-city and rural schools, where the children could benefit from an "alternative learning experience".

Each one of the "simulations" was an interactive game in which the student was put in a certain life situation. For example, one simulation put you in the role of a bank manager, while another put you in the role of the CEO of a paper company. Each simulation consisted of 12 or 15 "chapters", each devoted to a certain issue.

When I started consulting for CRI, each simulation went out to over 100,000 students, and by the time I finished up, each simulation reached more than 250,000 students.

The entire framework was written in MFC/C++. A lot of the internal design came from the old Macromedia Director, which was very popular at the time for creating interactive storyboards. A typical simulation took 6 to 8 months to write. The team consisted of a producer, 2 writers, 2 artists, a QA tester, and myself. Every few weeks, I would get a ZIP file in the mail consisting of the script for a new chapter, and all of the graphics, plus some haphazard directions for one or more "activities" that the kids would have to do in the chapter.

My involvement with CRI ended in 2001 when IBM, who was one of the partners, decided that they wanted to move to a new, internal Flash-based system, and wanted to end all C++ development. It took the IBM consultants quite a while to get the first simulation out there in Flash, but they finally managed to recreate all of the simulations and re-release them.

I was proud to have been a part of this effort for such a long time.

I was inspired to write this by a post that was on Joel on Software a few weeks ago. Joel talked about some cool new jobs that his jobsite was advertising, and one of the jobs dealt with medical technology, which is one of the noblest enterprises. Another worthy position is at DonorsChoose, which reminds me a bit of CRI.

©2006 Marc Adler - All Rights Reserved

Automatic Resource Refactoring Tool

Here

Move all of your hard-coded strings automatically into resource files.

©2006 Marc Adler - All Rights Reserved

Skyler Technology

Yet another entry in the time-series, object-cache, feed handler world ... Skyler Technology's C3 Database. This is definitely a hot area to be in right now, as Skyler, Vhayu, StreamBase, etc. all seem to be vying for a piece of the pie.

Skyler has a nice use case for order book management.

©2006 Marc Adler - All Rights Reserved

Saturday, December 09, 2006

DevExpress XtraPivotGridSuite

Recommended by a colleague who is very interested in OLAP tools....

How long before Infragistics comes up with something similar?

©2006 Marc Adler - All Rights Reserved

OLAP/Analysis Services

Had a very interesting presentation from Microsoft on Analysis Services and OLAP.

What are traders and risk managers using OLAP for at your bank?

Any experience using OLAP in a real-time scenario to perform up-to-the-second reporting? Our feeling is that OLAP cannot be used successfully in a real-time environment unless you have very small cubes.

©2006 Marc Adler - All Rights Reserved

Wednesday, December 06, 2006

FPG911

Brilliant quote from a colleague:

If you need time off from work to test-drive a Porsche, just tell your boss that you are investigating hardware acceleration.

©2006 Marc Adler - All Rights Reserved

Less Reliance on Vendors

Even though working at Morgan Stanley leaves a lot to be desired, I must say that they have the right idea about lessening their reliance on vendors. Their EIA group has its own XML-based pub/sub message bus (CPS), its own market data infrastructure (Filter), its own .NET client-side framework, and more. They have cut companies like Tibco out of the loop, and are no longer beholden to vendor release cycles, upgrade fees, and huge licensing costs. Morgan owns the source, and has the staff to maintain and enhance its IP. In fact, a friend of mine at Morgan told me that, if they wanted to, Morgan could take CPS and give Tibco a run for its money.

©2006 Marc Adler - All Rights Reserved

Sunday, December 03, 2006

CAB, EventBroker, and Wildcards

I will be blogging about the CAB EventBroker soon. But, I think that I like mine (previously published here) better. I would like to see wildcard support in the EventBroker's subscription strings.

In CAB, you can define an event to be published like this:

[EventPublication("event://Trade/Update", PublicationScope.Global)]

public event EventHandler<InstrumentUpdatedEventArgs> TradeUpdated;

........

public void TradeIsUpdated(Trade trade)

{

if (this.TradeUpdated != null)

{

this.TradeUpdated(this, new InstrumentUpdatedEventArgs(trade));

}

}

In some other module, you can subscribe to an event like this:

[EventSubscription("event://Trade/Update")]

public void OnTradeUpdated(object sender, InstrumentUpdatedEventArgs e)

{

}

I need wildcards. I might like to have a function that gets called when any operation happens to a Trade object. So, I would like to see subscription topics like these:

"event://Trade/*"

or

"event://Trade"

Either of these would cover the case when any operation happens to a trade. Each subscription string would catch all of the following topics:

event://Trade/Updated

event://Trade/Deleted

event://Trade/Created
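Here is a minimal sketch of one possible matching rule for such wildcard subscriptions, written in plain C# with no CAB dependency. TopicMatcher and Matches are hypothetical names, and the rule itself is an assumption: a subscription ending in "/*" matches anything below that prefix, and a subscription with no wildcard matches itself plus anything nested beneath it.

```csharp
using System;

// Hypothetical helper (not part of CAB): decides whether a fired topic
// should be delivered to a given subscription string.
public static class TopicMatcher
{
    public static bool Matches(string subscription, string topic)
    {
        if (subscription == null) throw new ArgumentNullException(nameof(subscription));
        if (topic == null) throw new ArgumentNullException(nameof(topic));

        // "event://Trade/*": everything before the '*' is a prefix, and the topic
        // must have at least one more character after it (any depth is allowed).
        if (subscription.EndsWith("/*", StringComparison.Ordinal))
        {
            string prefix = subscription.Substring(0, subscription.Length - 1); // keeps the trailing '/'
            return topic.Length > prefix.Length
                && topic.StartsWith(prefix, StringComparison.Ordinal);
        }

        // "event://Trade": the topic itself, or any topic nested beneath it.
        return string.Equals(subscription, topic, StringComparison.Ordinal)
            || topic.StartsWith(subscription + "/", StringComparison.Ordinal);
    }
}
```

A broker built on this would call Matches for every registered subscription each time a topic fires. Matching on whole path segments (note the trailing "/") keeps "event://Trade" from accidentally catching "event://TradeBlotter".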

I need Tibco-lite as my internal message bus.

©2006 Marc Adler - All Rights Reserved

Saturday, December 02, 2006

CAB and Status Bars?

I have not gotten into the Smart Client Software Factory yet (preferring to learn the underlying CAB framework first), so this question might be answered by the SCSF .... but has anyone made a UIExtensionSite for a status bar object yet?

©2006 Marc Adler - All Rights Reserved

Grid Computing and UBS

Grid computing webcast here, featuring people from UBS, Microsoft and Digipede.

Our head quant tells us that UBS has an internal website that can price exotics amazingly fast. Something that all of us can strive for...

©2006 Marc Adler - All Rights Reserved

Thursday, November 30, 2006

CAB and WorkItems

WorkItems

A WorkItem is considered to represent a “use case” in CAB terminology. Ignore this. It is really just a container of other kinds of objects along with some state information.

A CAB application has a tree of WorkItems. The main CabApplication class contains a reference to the root WorkItem, which is referred to by the RootWorkItem property. Given a WorkItem, you can go up one level to its ParentWorkItem, or down to the next level by accessing the workItem.WorkItems collection.

Recall that the main application class is defined like this:

class CABQuoteViewerApplication : FormShellApplication<QuoteViewerWorkItem, MainForm>

The first argument in the generic’s argument list is the type of WorkItem that will be the RootWorkItem of our entire application. All other WorkItems will be descendants of this RootWorkItem. A WorkItem has access to any of its descendants’ properties; however, sibling WorkItems cannot access each other’s properties directly.
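To make the parent/child navigation and the visibility rule above concrete, here is a toy model of a WorkItem tree. WorkItemNode, AddChild, Root and FindInSubtree are hypothetical names, not CAB's API; the sketch only encodes the rule as stated above, that a node can reach its own subtree but never a sibling's.

```csharp
using System.Collections.Generic;

// Hypothetical toy model of the WorkItem tree described above. It is not CAB's
// WorkItem class; the names are illustrative only.
public class WorkItemNode
{
    private readonly List<WorkItemNode> children = new List<WorkItemNode>();

    public WorkItemNode(string name)
    {
        Name = name;
    }

    public string Name { get; }
    public WorkItemNode Parent { get; private set; }          // one level up, like ParentWorkItem
    public IReadOnlyList<WorkItemNode> Children => children;  // one level down, like WorkItems
    public Dictionary<string, object> Items { get; } = new Dictionary<string, object>();

    public WorkItemNode AddChild(string name)
    {
        var child = new WorkItemNode(name) { Parent = this };
        children.Add(child);
        return child;
    }

    // Walk up until there is no parent: the equivalent of the RootWorkItem.
    public WorkItemNode Root
    {
        get
        {
            WorkItemNode node = this;
            while (node.Parent != null) node = node.Parent;
            return node;
        }
    }

    // Look in this node's Items, then depth-first through its descendants.
    // The search never climbs to the parent, so a sibling's Items are unreachable.
    public object FindInSubtree(string key)
    {
        if (Items.TryGetValue(key, out object value)) return value;
        foreach (WorkItemNode child in children)
        {
            object found = child.FindInSubtree(key);
            if (found != null) return found;
        }
        return null;
    }
}
```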

A WorkItem also contains various other collections. It has collections of:

- Workspaces

- SmartParts

- Commands

- EventTopics

- Services

- Items (you can stick any object in this collection, including state, views, etc)

You can Activate/Deactivate a WorkItem, Terminate it, and persist it (Save and Load).

There is a virtual function called OnRunStarted() that you can override in order to create views, read data, etc.

WorkItemExtensions

A WorkItemExtension is a way of extending the behavior of a WorkItem without changing the WorkItem’s code or resorting to subclassing the WorkItem. It is a class that simply receives certain events that happen to the associated WorkItem. These events are:

Initialized

RunStarted

Activated

Deactivated

Terminated

To extend a WorkItem, you need to create a new subclass of WorkItemExtension. Then you need to create an object of that class; this object is associated with an underlying WorkItem. Then you just handle certain events that happen to the WorkItem.

public class QuoteViewWorkItemExtension : WorkItemExtension

{

public QuoteViewWorkItemExtension() {}

protected override void OnActivated()

{

PerformanceTimer.StartPerformanceTiming();

}

protected override void OnDeactivated()

{

PerformanceTimer.StopPerformanceTiming();

}

}

And, to use this new WorkItemExtension, you would do the following:

QuoteViewWorkItemExtension wix = new QuoteViewWorkItemExtension();

wix.Initialize(myWorkItem);
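Under the covers this is essentially the observer pattern. Here is a minimal CAB-free sketch of the idea: LifecycleItem, LifecycleExtension and ActivityLogExtension are hypothetical stand-ins for WorkItem, WorkItemExtension and the extension above, and only three of the five lifecycle events are modelled.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a WorkItem: it just raises lifecycle events.
public class LifecycleItem
{
    public event EventHandler Activated;
    public event EventHandler Deactivated;
    public event EventHandler Terminated;

    public void Activate() => Activated?.Invoke(this, EventArgs.Empty);
    public void Deactivate() => Deactivated?.Invoke(this, EventArgs.Empty);
    public void Terminate() => Terminated?.Invoke(this, EventArgs.Empty);
}

// Hypothetical stand-in for WorkItemExtension: Initialize subscribes to the
// item's events, so behaviour is added without editing or subclassing the item.
public abstract class LifecycleExtension
{
    public void Initialize(LifecycleItem item)
    {
        if (item == null) throw new ArgumentNullException(nameof(item));
        item.Activated += (sender, e) => OnActivated();
        item.Deactivated += (sender, e) => OnDeactivated();
        item.Terminated += (sender, e) => OnTerminated();
    }

    protected virtual void OnActivated() { }
    protected virtual void OnDeactivated() { }
    protected virtual void OnTerminated() { }
}

// Example extension that records what happened, in place of the timing calls above.
public class ActivityLogExtension : LifecycleExtension
{
    public List<string> Log { get; } = new List<string>();

    protected override void OnActivated() => Log.Add("activated");
    protected override void OnDeactivated() => Log.Add("deactivated");
    protected override void OnTerminated() => Log.Add("terminated");
}
```

Usage mirrors the two lines above: create the extension, call Initialize with the item, and from then on the item's lifecycle drives the extension's overrides.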

©2006 Marc Adler - All Rights Reserved

CAB and Workspaces

Workspaces

You might be familiar with various kinds of layout managers that automatically arrange the windows that the manager contains. If you are a Java developer, you might be used to layout managers like the FlowLayout manager and the GridLayout manager. The layout manager works in conjunction with a container. The container holds the controls, and the layout manager positions and sizes the controls as they are added to the container.

In CAB, we have Workspaces and SmartParts. A Workspace is a container for holding SmartParts. A WorkItem contains a list of zero or more Workspaces, so you can have workspaces within workspaces.

Most CAB applications will need to create a root Workspace within the main form.

The different kinds of Workspaces in CAB are:

- WindowWorkspace: a vanilla area for holding SmartParts. For an MdiWorkspace, it will automatically create a Form to hold a SmartPart.

- DeckWorkspace: stacks SmartParts in an overlapping manner.

- MdiWorkspace: a regular MDI container; derives from WindowWorkspace.

- TabWorkspace: tabbed windows.

- ZoneWorkspace: allows tiling of window areas; good for implementing an Outlook-type layout.

There are two ways of adding workspaces. One is to use the Visual Studio .NET designer and drag a workspace from the Toolbox onto a form.

The other way is to dynamically create the workspace in the FormShellApplication’s AfterShellCreated() override.

Here is an example of creating various types of workspaces using the second method (the code is “unwound” for the sake of this article):

using System;

using System.Windows.Forms;

using CABQuoteViewer.WorkItems;

using Microsoft.Practices.CompositeUI.SmartParts;

using Microsoft.Practices.CompositeUI.WinForms;

namespace CABQuoteViewer

{

class CABQuoteViewerApplication : FormShellApplication<QuoteViewerWorkItem, MainForm>

{

private IWorkspace m_workspace;

private QuoteViewerWorkItemExtension m_quoteWorkItemExt;

/// <summary>

/// The main entry point for the application.

/// </summary>

[STAThread]

static void Main()

{

new CABQuoteViewerApplication().Run();

}

protected override void BeforeShellCreated()

{

base.BeforeShellCreated();

if (this.RootWorkItem != null)

{

this.m_quoteWorkItemExt = new QuoteViewerWorkItemExtension();

this.m_quoteWorkItemExt.Initialize(this.RootWorkItem);

}

}

protected override void AfterShellCreated()

{

base.AfterShellCreated();

this.CreateWorkspace("Deck");

}

private void CreateWorkspace(string wsTypeName)

{

if (wsTypeName == "Mdi")

{

this.m_workspace = new MdiWorkspace(this.Shell);

this.RootWorkItem.Workspaces.Add(this.m_workspace, "ClientWorkspace");

}

else if (wsTypeName == "Tab")

{

this.m_workspace = this.RootWorkItem.Workspaces.AddNew<TabWorkspace>("ClientWorkspace");

TabWorkspace tabWorkspace = this.m_workspace as TabWorkspace;

tabWorkspace.Dock = DockStyle.Fill;

this.Shell.Controls.Add(tabWorkspace);

}

else if (wsTypeName == "Deck")

{

this.m_workspace = this.RootWorkItem.Workspaces.AddNew<DeckWorkspace>("ClientWorkspace");

DeckWorkspace deckWorkspace = this.m_workspace as DeckWorkspace;

deckWorkspace.Dock = DockStyle.Fill;

this.Shell.Controls.Add(deckWorkspace);

}

else if (wsTypeName == "Zone")

{

this.m_workspace = this.RootWorkItem.Workspaces.AddNew<ZoneWorkspace>("ClientWorkspace");

ZoneWorkspace zoneWorkspace = this.m_workspace as ZoneWorkspace;

zoneWorkspace.Dock = DockStyle.Fill;

this.Shell.Controls.Add(zoneWorkspace);

}

else

{

throw new Exception("Cannot create workspace");

}

}

}

}

When you add a SmartPart to a Workspace, you can pass along hints that tell the Workspace how to lay out and decorate the SmartPart. The Workspace class has functions for:

- Showing a SmartPart (which also adds the SmartPart to the Workspace as well)

- Hiding a SmartPart

- Activating a SmartPart

- Closing a SmartPart

There are events that get fired when a SmartPart is activated within a Workspace, and when a SmartPart is closing within a Workspace.
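To illustrate these operations and the two events, here is a toy, UI-free model of a deck-style workspace. DeckModel and its members are hypothetical, not CAB's DeckWorkspace, and the Hide behaviour (sending the part to the bottom of the deck) is an assumption made for the sketch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of a deck-style workspace; not CAB's DeckWorkspace.
// The last element of 'deck' is the SmartPart currently on top.
public class DeckModel<TPart> where TPart : class
{
    private readonly List<TPart> deck = new List<TPart>();

    public event Action<TPart> SmartPartActivated;
    public event Action<TPart> SmartPartClosing;

    public TPart ActiveSmartPart => deck.Count == 0 ? null : deck[deck.Count - 1];
    public int Count => deck.Count;

    // Show adds the part if it is new, then brings it to the top.
    public void Show(TPart part)
    {
        deck.Remove(part);
        deck.Add(part);
        SmartPartActivated?.Invoke(part);
    }

    // In this sketch, Activate only works for parts that have already been shown.
    public void Activate(TPart part)
    {
        if (!deck.Contains(part)) throw new ArgumentException("Part has not been shown.", nameof(part));
        Show(part);
    }

    // Assumption for the sketch: hiding sends the part to the bottom of the deck.
    public void Hide(TPart part)
    {
        if (deck.Remove(part)) deck.Insert(0, part);
    }

    // Close raises SmartPartClosing, removes the part and uncovers the next one.
    public void Close(TPart part)
    {
        if (!deck.Contains(part)) return;
        SmartPartClosing?.Invoke(part);
        deck.Remove(part);
    }
}
```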

You can create new, custom workspaces in CAB, and a later article will cover this.

©2006 Marc Adler - All Rights Reserved

The CAB Application Class Hierarchy

CAB Application Classes

The hierarchy of the CABApplication classes is shown in the diagram below. The top three classes should never be derived from. In almost all cases, your WinForms-based application will derive from FormShellApplication.

A CAB Application will be driven by the base CabApplication class. This class does most of the work to get the CAB application started.

The only field that the CabApplication class has is the root WorkItem, which as the name implies, is the root node of the entire application’s WorkItem tree. I will discuss WorkItems later.

The constructor of CabApplication does nothing; it is the Run() method (which is called by your application’s Main() function) that bootstraps the entire application. The Run() method will do the following:

- Create the pre-defined services.

- Authenticate the user.

- Read the config file (ProfileCatalog.xml), which contains a list of all of the plug-in modules that the app wants to load. Each module can have an optional list of roles. The module will be loaded only if the current user belongs to one of those roles. If a module has no role information associated with it, then the module will always be loaded.

- Create the Shell (i.e., the MainForm).

- Load all of the modules that are permitted to be loaded. In each class in the module that derives from the ModuleInit class, all fields that are tagged with the [ServiceDependency] attribute are created. All classes tagged with the [Service] attribute are added to the application’s list of services.

- Call RootWorkItem.Run() for the application’s root WorkItem.

- In the FormShellApplication class, call the WinForms method Application.Run() to start the WinForms app.
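The role check in the module-loading step boils down to a simple filter. This is a minimal sketch, assuming a hypothetical ModuleEntry type rather than the real profile catalog classes, and assuming that a user needs only one of a module's roles; role names are compared case-sensitively here.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical description of one module entry from a profile catalog.
public class ModuleEntry
{
    public ModuleEntry(string assemblyFile, params string[] roles)
    {
        AssemblyFile = assemblyFile;
        Roles = roles ?? new string[0];
    }

    public string AssemblyFile { get; }
    public IReadOnlyList<string> Roles { get; }
}

public static class ModuleFilter
{
    // A module with no roles always loads; otherwise the user needs at least one of its roles.
    public static List<ModuleEntry> Allowed(IEnumerable<ModuleEntry> modules, ISet<string> userRoles)
    {
        return modules
            .Where(m => m.Roles.Count == 0 || m.Roles.Any(r => userRoles.Contains(r)))
            .ToList();
    }
}
```

For example, a user in the hypothetical "Trader" role would get an unrestricted QuoteModule.dll plus a TradingModule.dll restricted to "Trader" or "Admin", but not a RiskModule.dll restricted to "RiskManager".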

The Derived Application Classes

The CabShellApplication class extends the CabApplication by

- Keeping a reference to the Shell (our MainForm) by adding it to the RootWorkItem’s list of items.

- Adding BeforeShellCreated and AfterShellCreated virtual methods. These methods can be overridden in your application class.

- Using a Windows Forms visualizer

- Adding some UI and command-routing services

The FormShellApplication class merely overrides the Start() method and calls the familiar WinForms method Application.Run(mainform).
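The relationship between Run() and the overridable methods is the template method pattern: the base class fixes the order of the bootstrap steps, and subclasses override only the steps they care about. Here is a heavily simplified sketch; AppBase, ConsoleShellApp and QuoteViewerApp are hypothetical classes, and the step names are just labels standing in for the real work.

```csharp
using System.Collections.Generic;

// Hypothetical, heavily simplified model of the bootstrap described above;
// the real CabApplication also authenticates, reads the profile catalog and loads modules.
public abstract class AppBase
{
    public List<string> Steps { get; } = new List<string>();

    // Run() fixes the order of the steps; subclasses only fill in the hooks.
    public void Run()
    {
        Steps.Add("create services");
        BeforeShellCreated();
        Steps.Add("create shell");
        AfterShellCreated();
        Steps.Add("run root work item");
        Start();
    }

    protected virtual void BeforeShellCreated() { }
    protected virtual void AfterShellCreated() { }
    protected abstract void Start();
}

// Plays the role of FormShellApplication: it only supplies Start().
// A WinForms version would call Application.Run(shell) here.
public class ConsoleShellApp : AppBase
{
    protected override void Start() => Steps.Add("start message loop");
}

// Plays the role of your own application class, overriding an optional hook.
public class QuoteViewerApp : ConsoleShellApp
{
    protected override void AfterShellCreated() => Steps.Add("create workspace");
}
```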

©2006 Marc Adler - All Rights Reserved

A Minimal CAB Application

First CAB Application

1) Create a new Windows Forms Application solution named CABQuoteViewer.

a. Change the name of the form to MainForm.

2) Add the existing CAB projects to the solution.

a. Browse to C:\Program Files\Microsoft Composite UI App Block\CSharp\Source

b. Add the existing projects for ObjectBuilder, CompositeUI, and CompositeUI.WinForms.

c. In the CABQuoteViewer project, add references to the above 3 projects.

3) Rename the file Program.cs to CABQuoteViewerApplication.cs

4) In the file CABQuoteViewerApplication.cs,

a. Add using directives for the CAB namespaces:

using Microsoft.Practices.CompositeUI;

using Microsoft.Practices.CompositeUI.WinForms;

b. Change the definition of the class from

static class Program

to

class CABQuoteViewerApplication : FormShellApplication<WorkItem, MainForm>

c. The body of the Main() function should just be

new CABQuoteViewerApplication().Run();

The final version of CABQuoteViewerApplication.cs is:

using System;

using Microsoft.Practices.CompositeUI;

using Microsoft.Practices.CompositeUI.WinForms;

namespace CABQuoteViewer

{

class CABQuoteViewerApplication : FormShellApplication<WorkItem, MainForm>

{

[STAThread]

static void Main()

{

new CABQuoteViewerApplication().Run();

}

}

}

After compiling and running this application, you will see the empty MainForm appear.

©2006 Marc Adler - All Rights Reserved

Tuesday, November 28, 2006

Getting Started with CAB

It looks like, for various reasons that shall accompany me to the grave, we will be bootstrapping the "top part" of our client-side framework with Microsoft's Composite Application Block. I am excited to finally be given the chance to learn CAB, and to lead the team doing the new client-side framework for my investment bank.

I will try to document my learning process with CAB so that I can save my successors some pain.

Installation of CAB and Accessories

Download and install the following files in order (make sure to install GAX before installing GAT):

1) Download Composite Application Block for C# (CAB)

2) Download Enterprise Library 2006 (EntLib)

3) Download the Guidance Automation Extensions (GAX)

4) Download the Guidance Automation Toolkit (GAT)

(At this point, before installing the Smart Client Software Factory, you must build CAB using the CompositeUI.sln solution file located in the directory C:\Program Files\Microsoft Composite UI App Block\CSharp. You must also close Visual Studio before installing SCSF.)

5) Download the Smart Client Software Factory (June 2006 version) (SCSF)

6) Download the CAB Hands-on Lab

7) Download the Intro to CAB document and the CAB help files (you might want to create shortcuts on the desktop for these files.)

8) Download the Sample Visualizations

If you have problems uninstalling GAX or GAT, read this.

Resources

· The CAB home on Microsoft Patterns and Practices area on GotDotNet

· CabPedia

· MSDN Magazine Article

· Getting Started with CAB on the Fear and Loathing blog

· Understanding CAB series on Szymon’s blog

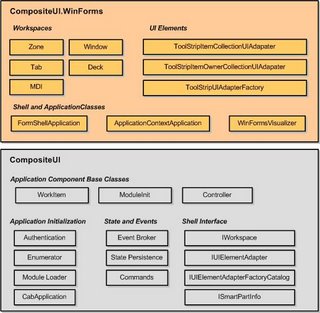

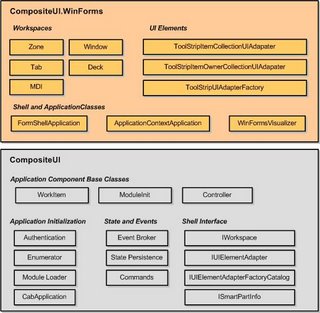

Class Hierarchy

Here is a diagram of the major classes in CAB:

©2006 Marc Adler - All Rights Reserved

Monday, November 27, 2006

Free Market Data

Free ECN real-time data from OpenTick.

Very cheap ($1) monthly fees for some other data.

They have APIs in various languages, including C# for .NET 2.0. This seems like a good way to test the async data-handling part of a framework. Here are some example docs for one of their callbacks:

static void onQuote(OTQuote quote)

Description: Provides real-time and historical quotes.

Provides: OTQuote
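One way to use a callback like onQuote to exercise async data handling is to have the callback do nothing but enqueue, and let a dedicated consumer thread process the quotes. This is a sketch under stated assumptions: it uses BlockingCollection from .NET 4, so it will not compile against the .NET 2.0 API mentioned above, and Quote and QuotePump are hypothetical types, not part of OpenTick's API.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical quote type; OpenTick's real OTQuote class has its own fields.
public sealed class Quote
{
    public Quote(string symbol, decimal bid, decimal ask)
    {
        Symbol = symbol;
        Bid = bid;
        Ask = ask;
    }

    public string Symbol { get; }
    public decimal Bid { get; }
    public decimal Ask { get; }
}

// The feed's callback only enqueues, so the feed thread is never blocked by slow
// processing; one consumer thread drains the queue in arrival order.
public sealed class QuotePump : IDisposable
{
    private readonly BlockingCollection<Quote> queue = new BlockingCollection<Quote>();
    private readonly Action<Quote> handler;
    private readonly Thread consumer;

    public QuotePump(Action<Quote> handler)
    {
        this.handler = handler ?? throw new ArgumentNullException(nameof(handler));
        consumer = new Thread(Drain) { IsBackground = true, Name = "QuoteConsumer" };
        consumer.Start();
    }

    // Shaped like a feed callback such as onQuote(OTQuote quote).
    public void OnQuote(Quote quote) => queue.Add(quote);

    private void Drain()
    {
        // Blocks while the queue is empty; ends once CompleteAdding has been called and the queue is drained.
        foreach (Quote q in queue.GetConsumingEnumerable())
            handler(q);
    }

    // Stop accepting quotes, let the consumer finish what is queued, then clean up.
    public void Dispose()
    {
        queue.CompleteAdding();
        consumer.Join();
        queue.Dispose();
    }
}
```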

©2006 Marc Adler - All Rights Reserved

Eclipse Trader

A colleague pointed out the open-source Eclipse Trader, built upon the Eclipse Rich Client Platform (RCP).

There exist people in my company, whose number is > 1, who would be most pleased if I fully embraced Eclipse RCP.

©2006 Marc Adler - All Rights Reserved

Downloading Yahoo Quotes

Here is a great page for composing URLs to get all sorts of delayed quote data from Yahoo.

©2006 Marc Adler - All Rights Reserved

Saturday, November 25, 2006

Windows Shutdown Crapfest

Via Joel, there is required reading on Microsoft here.

I am currently involved with Microsoft's MCS and DPE in my job. I know that many of the people that I interact with are fervent readers of both Joel and of Mini-Microsoft, and that they will eventually read the above-mentioned article.

What has happened to my beloved Microsoft? Thank the lord that we are engaging some of the most talented Microsoft partners on the planet.

©2006 Marc Adler - All Rights Reserved

Friday, November 24, 2006

Object Cache Considerations (Part 3)

Distribution and Subscriptions

An out-of-process object cache should have not only a storage component but also a messaging system. One of the architects in my group, who is a well-known messaging guru, told me that the ideal object cache should have a state-of-the-art messaging system attached to it.

Our object caches should be distributed and subscribable.

A logical cache can be distributed amongst several different servers. We can do this for load balancing and for failover. Applications also have local caches that communicate with the master, distributed cache(s).

Let's say that we are storing information about each position that our company maintains. We might want to have 3 distributed caches, one that stores positions for our customers in the US, one for customers in Europe and the Middle East/Africa, and one for Asia. Upon startup, the master cache loader will read all of the positions from the database and will populate each of the three caches.

This is an example of a very simple load balancer for the distributed caches. Other load balancing schemes include partitioning the positions by the first digit of the position id, a date range, etc.
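Both partitioning schemes can be expressed as a small routing function. The sketch below is hypothetical. The `PositionRouter` class, the region codes, and the cache names (`cache-us`, `cache-emea`, `cache-asia`) are illustrative only. A real loader would read its rows from the database rather than from an in-memory map.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical router that decides which master cache owns a position.
final class PositionRouter {
    // Scheme from the text: one master cache per customer region.
    static String byRegion(String region) {
        switch (region) {
            case "US":   return "cache-us";
            case "EMEA": return "cache-emea";
            case "ASIA": return "cache-asia";
            default: throw new IllegalArgumentException("unknown region: " + region);
        }
    }

    // Alternative scheme: partition on the first digit of a numeric position id.
    static int byFirstDigit(String positionId, int partitions) {
        char first = positionId.charAt(0);
        if (first < '0' || first > '9') {
            throw new IllegalArgumentException("non-numeric position id: " + positionId);
        }
        return (first - '0') % partitions;
    }

    // Startup loader: distribute every (positionId -> region) row to its master cache.
    static Map<String, List<String>> populate(Map<String, String> regionByPositionId) {
        Map<String, List<String>> caches = new TreeMap<>();
        for (Map.Entry<String, String> row : regionByPositionId.entrySet()) {
            caches.computeIfAbsent(byRegion(row.getValue()), k -> new ArrayList<>())
                  .add(row.getKey());
        }
        return caches;
    }
}
```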

Each application that uses positions will have its own local cache. These local caches will usually contain a subset of the data that is in the master caches. For example, the US Derivatives Desk might just need to cache positions from US portfolios that have been active in the last 30 days.
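A local cache that holds only a subset of the master data can be modeled as a cache with an "interest" predicate. This minimal sketch assumes a hypothetical `Position` type that carries a region and a last-activity date. The predicate mirrors the US Derivatives Desk example above.

```java
import java.time.LocalDate;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical position record: id, owning region, and date of last activity.
final class Position {
    final String id;
    final String region;
    final LocalDate lastActive;

    Position(String id, String region, LocalDate lastActive) {
        this.id = id;
        this.region = region;
        this.lastActive = lastActive;
    }
}

// Local cache that keeps only the positions its interest predicate accepts.
final class LocalCache {
    private final Map<String, Position> entries = new HashMap<>();
    private final Predicate<Position> interest;

    LocalCache(Predicate<Position> interest) {
        this.interest = interest;
    }

    // Example interest: US positions active within the last 'days' days.
    static Predicate<Position> usActiveWithin(LocalDate today, int days) {
        LocalDate cutoff = today.minusDays(days);
        return p -> p.region.equals("US") && !p.lastActive.isBefore(cutoff);
    }

    // Called for each position published by a master cache. A position that
    // no longer matches (e.g. it went quiet) is evicted rather than kept stale.
    void offer(Position p) {
        if (interest.test(p)) entries.put(p.id, p);
        else entries.remove(p.id);
    }

    boolean contains(String id) { return entries.containsKey(id); }

    int size() { return entries.size(); }
}
```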

When an application updates or deletes a position in one of the master caches, we need to update all of the other master caches that we are using for failover purposes, and any other master caches that contain that particular position. Similarly, when we create a new position, we need to propagate that new position to any redundant caches or any caches that might be interested in the new position.

We might need to push the new or updated position to any of the local caches that are interested in that position. We have a choice of architecture for distributing updates to local caches.

1) We do not distribute the updated object at all. An application won’t know that there is new data in the master cache until it retrieves that object again.

2) We push the update to the local caches right away. We can push out full objects or just the deltas (changes to the object).

Both schemes have disadvantages. Under scheme (1), the application could be working with stale data. Under scheme (2), we could be updating an object in the local cache while the application is working on that same object, and we also take on a heavier messaging burden.
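Under scheme (2), one common way to soften the concurrency problem is to make cached objects immutable. Pushed updates then replace an object rather than mutate it in place, so code that already holds a reference keeps a consistent view. The sketch below assumes hypothetical `PositionSnapshot` and `PushedLocalCache` classes, and it uses a version number to discard stale pushes.

```java
import java.util.concurrent.ConcurrentHashMap;

// Immutable snapshot: pushed updates replace it instead of mutating it.
final class PositionSnapshot {
    final String id;
    final long quantity;
    final long version;

    PositionSnapshot(String id, long quantity, long version) {
        this.id = id;
        this.quantity = quantity;
        this.version = version;
    }

    // Produces the next version; in practice the master cache would assign versions.
    PositionSnapshot withQuantity(long newQuantity) {
        return new PositionSnapshot(id, newQuantity, version + 1);
    }
}

// Local cache fed by pushes from a master cache (scheme 2).
final class PushedLocalCache {
    private final ConcurrentHashMap<String, PositionSnapshot> entries = new ConcurrentHashMap<>();

    // Called on the messaging thread. The reference swap is atomic, and a stale
    // or duplicate push (same or lower version) never overwrites newer data.
    void applyPush(PositionSnapshot update) {
        entries.merge(update.id, update,
                (current, incoming) -> incoming.version > current.version ? incoming : current);
    }

    // Readers get whatever snapshot is current; it will not change underneath them.
    PositionSnapshot get(String id) {
        return entries.get(id);
    }
}
```

The trade-off is that a reader holding an old snapshot is looking at stale data, which is the scheme (1) problem in miniature. The reader has to call `get` again to see the update.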

The master caches need some way of communicating with the local caches. We can reach each application through one of the familiar messaging mechanisms: Tibco EMS, Tibco RV, LBM, sockets, etc.

We need to make sure that the messaging is reliable. Each subscriber must receive the update of the object from the master cache. There is no tolerance for dropped messages. Otherwise, different applications might be working with different versions of an object.
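A common way to detect a dropped update is to stamp each message with a per-publisher sequence number and have the subscriber watch for gaps. This is a minimal sketch under that assumption, and the `SequenceTracker` class is hypothetical. A real system would use the recorded gaps to re-fetch the missing objects from the master cache.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Tracks one publisher's sequence numbers so a subscriber can spot lost updates.
final class SequenceTracker {
    private long expected = 1;                           // next sequence number we should see
    private final List<long[]> gaps = new ArrayList<>(); // missing ranges, inclusive

    // Returns true if the update should be applied, false for a duplicate/late replay.
    boolean onMessage(long seq) {
        if (seq < expected) return false;
        if (seq > expected) gaps.add(new long[] {expected, seq - 1}); // something was dropped
        expected = seq + 1;
        return true;
    }

    List<long[]> gaps() {
        return Collections.unmodifiableList(gaps);
    }
}
```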

We do not have to make the messages durable. In other words, if a client goes offline for a while, the cache's messaging layer does not have to hold on to updates until that client reconnects, because the client can reload whatever it needs from the master cache when it comes back. This saves us from storing out-of-date messages.

Using a JMS-based messaging scheme also means that we can use JMS selectors to filter out objects that an application is not interested in. Selectors carry some overhead, but they make it easy to set up a filter-based pub/sub mechanism between the master caches and any local caches. For example, one application might only be interested in updates to position objects whose position id starts with the prefix “A23”. A JMS selector with the pattern “positionId LIKE 'A23%'” does exactly that.
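With JMS, the selector is supplied when the consumer is created, for example `session.createConsumer(topic, "positionId LIKE 'A23%'")`. The selector is evaluated against message header fields and properties, not against the message body. This example assumes the publisher sets the position id as a message property named `positionId` on every update. In selector syntax, `%` matches any sequence of characters and `_` matches exactly one. The hypothetical `LikeMatcher` below reproduces just that wildcard behavior on the client side, which is handy for checking what a pattern will match. It ignores the `ESCAPE` clause.

```java
import java.util.regex.Pattern;

// Client-side illustration of JMS selector LIKE wildcards (no ESCAPE support).
final class LikeMatcher {
    static boolean like(String value, String pattern) {
        StringBuilder regex = new StringBuilder();
        for (char c : pattern.toCharArray()) {
            if (c == '%') regex.append(".*");      // any run of characters
            else if (c == '_') regex.append('.');  // exactly one character
            else regex.append(Pattern.quote(String.valueOf(c))); // everything else is literal
        }
        return Pattern.compile(regex.toString(), Pattern.DOTALL).matcher(value).matches();
    }
}
```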

©2006 Marc Adler - All Rights Reserved

Sunday, November 19, 2006

So long Mike

Our friend Mike was a 25-year veteran of the Financial Service practice of a very large consulting firm. He was just informed that he was to be dismissed, on the basis of not making his (unrealistic) sales quota. The new management of the division and Mike did not agree on things, and the easy way to get rid of a person is to set him up for failure.

Mike will easily find a new position, as he is known and respected in the financial services area. And opportunity is often born of adversity.

Know the warning signs when you are being set up to fail. Mike saw them a mile away, and was prepared.

©2006 Marc Adler - All Rights Reserved

SQL Server 2005 Performance Tips

Here

Good performance hints if you are doing a lot of OLTP processing. Most of the tips will work for Sybase as well.

It is remarkable what performance improvements you can make in an OLTP system if you hire a true database tuning expert to go over your systems with a fine-toothed comb. Unfortunately, the tuning does not usually extend to refactoring the data model, because a lot of apps touch the databases, and you would then have to go in and start refactoring app code.

SQL Server 2005 comes with new, easy-to-use profiling tools, and I encourage you to take advantage of them as you are developing new apps. If you are a dev manager, try to get funding for two weeks of a SQL Server 2005 expert's time once your data model is written.

In addition, try to get your SANs tuned correctly, and optimize the interaction between frequently used database indexes and the spindles.

How about hardware acceleration, like Solid State Disks?

Kudos go out to this site, completely devoted to SQL Server performance tuning.

Final word - if you have a mission-critical application that involves heavy database access, spend the bucks and hire a DB architect who knows how to tune databases like a 1964 Fender Strat.

©2006 Marc Adler - All Rights Reserved

Sunday, November 12, 2006

Object Cache Considerations (Part 2)

Object Versioning

You can consider the ‘version’ of an object to be two different things.

In the first case, the ‘version’ of an object could represent the number of times a particular object has been written to. When an object is first created, its version number is set to 1, and each time a client updates the object, the version number is incremented. So we can have an API call in our object cache that tests whether we are holding on to the most recent version:

```csharp
if (!ObjectCache.IsCurrent(trade))
    trade = ObjectCache.Get(trade.Key);
```

or, if we are using an object that has a proxy to the cache, we can do something like this:

```csharp
if (!trade.IsCurrent())
    trade.Refresh();
```
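The write-count flavor of versioning is easy to model. Every write bumps a counter, and `IsCurrent` compares the caller's copy against the latest write. The following is a minimal in-process Java sketch. `Versioned` and `VersionedCache` are hypothetical stand-ins for the `ObjectCache` calls above, not an existing API.

```java
import java.util.HashMap;
import java.util.Map;

// A cached value tagged with how many times its key has been written.
final class Versioned<T> {
    final String key;
    final T value;
    final long version;

    Versioned(String key, T value, long version) {
        this.key = key;
        this.value = value;
        this.version = version;
    }
}

// Hypothetical in-process stand-in for the ObjectCache calls in the text.
final class VersionedCache<T> {
    private final Map<String, Versioned<T>> store = new HashMap<>();

    // The first write of a key creates version 1; each later write adds 1.
    synchronized Versioned<T> put(String key, T value) {
        Versioned<T> previous = store.get(key);
        long next = (previous == null) ? 1 : previous.version + 1;
        Versioned<T> entry = new Versioned<>(key, value, next);
        store.put(key, entry);
        return entry;
    }

    synchronized Versioned<T> get(String key) {
        return store.get(key);
    }

    // True if the caller's copy is still the latest write for its key.
    synchronized boolean isCurrent(Versioned<T> held) {
        Versioned<T> latest = store.get(held.key);
        return latest != null && latest.version == held.version;
    }
}
```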

In the second (and substantially more complex) case, the ‘version’ can represent the actual layout or shape of an object’s class. Consider a Trade object:

```csharp
public class Trade : CachedObject
{
    public int SecurityId;
    public double Price;
}
```

This would be version 1.0 of the class.

Let’s say that we have a trading system that has to run 24x7, as is the current rage. Systems that run 24x7 theoretically never get a chance to be bounced, whereas even a system that runs 24x6.5 has a window for maintenance. Our trading system has version 1.0 of the Trade object.

Now let’s say that we have a request to add sales attribution to the trading system, so now we need to add the id of the sales trader that took the trade request.

```csharp
public class Trade : CachedObject
{
    public int SecurityId;
    public double Price;
    public int BrokerId;
}
```

This is now version 1.1 of the class.

Our object cache holds version 1.0 objects, and all of our subscribers also hold version 1.0 objects. But, now let’s say that the system that writes new trades into the object cache now has to write version 1.1 objects. What do we do?

There are several things to consider here. How do we represent the object in the cache? Because we are using name/value pairs, all new objects will just have the BrokerId field added. The old 1.0 objects that are in the cache do not have to change.
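Storing trades as name/value pairs is what lets 1.0 and 1.1 records sit side by side, because a 1.0 record simply has no `BrokerId` entry. Here is a minimal sketch, assuming a hypothetical `NameValueTrade` wrapper whose getters return `null` when a field is absent. That `null` is the Java analogue of a C# `int?` with no value.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical trade stored as name/value pairs; 1.0 and 1.1 records coexist.
final class NameValueTrade {
    private final Map<String, String> fields = new HashMap<>();

    // Builds a record from alternating name, value arguments.
    static NameValueTrade of(String... nameValuePairs) {
        if (nameValuePairs.length % 2 != 0) {
            throw new IllegalArgumentException("odd number of arguments");
        }
        NameValueTrade trade = new NameValueTrade();
        for (int i = 0; i < nameValuePairs.length; i += 2) {
            trade.fields.put(nameValuePairs[i], nameValuePairs[i + 1]);
        }
        return trade;
    }

    // null means "this record predates the field" (e.g. BrokerId on a 1.0 trade).
    Integer getInt(String name) {
        String raw = fields.get(name);
        return raw == null ? null : Integer.valueOf(raw);
    }

    Double getDouble(String name) {
        String raw = fields.get(name);
        return raw == null ? null : Double.valueOf(raw);
    }
}
```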

The object cache might want to broadcast a message to all subscribers, telling them that the version number of the Trade object has changed. Since the subscribers may themselves be systems that must run 24x7, they might not be able to be bounced in order to rebuild their trade caches. They must be able to read and write the new version 1.1 objects while continuing to support the older 1.0 objects. But we cannot reconfigure the layout of the objects dynamically, can we?

Instead of using C# objects, we might consider using dictionaries of dictionaries to represent an app’s object cache. But this is a different kind of programming model. Instead of coding:

```csharp
Trade obj = ObjectCache.Get("102374");
int broker = obj.BrokerId;
```

We might have to do the following (taking advantage of C# 2.0’s nullable types):

```csharp
TradeDictionaryObject obj = ObjectCache.Get("102374");
int? broker = obj.GetInt("BrokerId");
```

What a mess!

What does this tell us? When using an object cache for a 24x7 system, make sure you get your class definition right the first time, and avoid object versioning!

©2006 Marc Adler - All Rights Reserved

Considerations for an Object Cache (Part 1)

Let’s say that we wanted to write our own multi-platform, distributed, subscription-based object cache. What would we need to do to write the ultimate object cache?

Let’s look at the variety of issues that we would have to address when writing caching middleware. I am sure that vendors like Gemstone have gone through this exercise already, but why not go through it again!

Multiplatform support

Most investment banks have a combination of C++ (both Win32 and Unix), C#/.Net, and Java (both Win32 and Unix) applications. It is common to have a .Net front-end talking to a Java server, which in turn communicates with a C++-based pricing engine. We need to be able to represent the object data in some form that can be easily accessed by applications on all of these platforms.

The most universal approach would be to represent the object as pure text, and to send it across the wire as text. What kind of text representation would we use?

XML – quasi-universal. We would have to ensure that XML written by one system is readable by other systems. XML serialization is well-known between Java and C# apps, but what about older C++ apps? For C++, would we use Xerces? Also, there is the cost of serialization and deserialization, not to mention the amount of data that is sent over the wire.

Name/Value Pairs – easy to generate and parse. Same costs as XML. We would have to write our own serialization and deserialization schemes for each system. How about complex, hierarchical data? Can simple name/value pairs represent complex data efficiently? Or would we just end up rewriting the XML spec?
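To make the "write our own serialization" cost concrete, here is a minimal flat name/value codec. It assumes a hypothetical wire format of `name=value` pairs separated by `;`, with a backslash escaping the three special characters. It deliberately does not handle nested data, which is exactly the limitation raised above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical flat codec: name=value;name=value with '\' escaping '\', '=' and ';'.
final class NameValueCodec {
    static String encode(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder();
        boolean first = true;
        for (Map.Entry<String, String> field : fields.entrySet()) {
            if (!first) sb.append(';');
            first = false;
            escape(field.getKey(), sb);
            sb.append('=');
            escape(field.getValue(), sb);
        }
        return sb.toString();
    }

    private static void escape(String s, StringBuilder out) {
        for (char c : s.toCharArray()) {
            if (c == '\\' || c == '=' || c == ';') out.append('\\');
            out.append(c);
        }
    }

    static Map<String, String> decode(String text) {
        Map<String, String> fields = new LinkedHashMap<>();
        StringBuilder key = new StringBuilder();
        StringBuilder value = new StringBuilder();
        StringBuilder current = key;
        boolean escaped = false;
        for (char c : text.toCharArray()) {
            if (escaped) { current.append(c); escaped = false; }
            else if (c == '\\') escaped = true;
            else if (c == '=' && current == key) current = value;  // switch from name to value
            else if (c == ';') {                                  // end of one pair
                fields.put(key.toString(), value.toString());
                key.setLength(0);
                value.setLength(0);
                current = key;
            }
            else current.append(c);
        }
        if (key.length() > 0 || current == value) fields.put(key.toString(), value.toString());
        return fields;
    }
}
```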

Instead of text, we can store objects as true binary objects. What kind of binary object do we store? Native or system-agnostic? If you have a variety of platforms writing into the object cache, do we store the object in the binary format of the system that created the object, or do we pick one platform and use that as master?

Master Format – We pick one format, either C++, C#, or Java binary objects. We would need a series of adapters to transform between binary formats. We would also need an indication of the platform that is doing the reading or writing. Let’s say that we were to store all objects as binary Java objects. If a Java app reads an object, then there would be no costs associated with object transformation, so we can just send a binary Java object down the wire (although we may have to worry about differences between the various versions of Java … can a Java 1.5 object with Java 1.5-specific types or classes be read by a Java 1.4 app?). If a C# app wants to read the Java object, then we must perform some translation. (Do we use something like CodeMesh to do this?) We also need to ensure that the adaptors can support all of the features of the various languages. For example, let’s say that Java came up with a new data type that C# did not support … would we try to find some representation of that type in C#, or would we just not translate that particular data type?

Native Format – We store pure binary objects, without regard to the system that is reading or writing the object. There is no translation layer. Apps are responsible for doing translation themselves. This is the fastest, most efficient way of storing objects. However, different teams might end up writing their own versions of the translation layer.

What other factors might we consider when choosing a native object format?

How about deltas in subscriptions? If we are storing large objects, then we might only want to broadcast changes to the object instead of resending the entire object. Delta transmission favors sending the changes out in text, and we can save the cost of translating the binary into text if we were just to store the objects as text. And, in this case, name/value pairs are favored.
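Computing and applying a delta over name/value pairs takes only a few lines. In this sketch (the `Deltas` class is hypothetical), a changed or added field carries its new value and a removed field is marked with `null`. That marker assumes cached objects never contain null values themselves.

```java
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;

// Hypothetical helper for name/value deltas: a changed or added field carries
// its new value; a removed field is carried with a null value.
final class Deltas {
    static Map<String, String> diff(Map<String, String> before, Map<String, String> after) {
        Map<String, String> delta = new TreeMap<>();
        for (Map.Entry<String, String> field : after.entrySet()) {
            if (!Objects.equals(before.get(field.getKey()), field.getValue())) {
                delta.put(field.getKey(), field.getValue());
            }
        }
        for (String name : before.keySet()) {
            if (!after.containsKey(name)) delta.put(name, null);
        }
        return delta;
    }

    static Map<String, String> apply(Map<String, String> base, Map<String, String> delta) {
        Map<String, String> result = new TreeMap<>(base);
        for (Map.Entry<String, String> field : delta.entrySet()) {
            if (field.getValue() == null) result.remove(field.getKey());
            else result.put(field.getKey(), field.getValue());
        }
        return result;
    }
}
```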

Large sets of name/value pairs can be compressed if necessary, but we have to consider the time needed to compress and decompress.
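With the JDK's standard `java.util.zip` GZIP streams, a compression round trip looks like the sketch below. The `Compression` helper is hypothetical. Repetitive name/value text tends to compress well. Whether the CPU time pays for the bandwidth saved has to be measured on real payloads, for example by timing the calls with `System.nanoTime()`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper: GZIP-compress name/value text before it goes on the wire.
final class Compression {
    static byte[] gzip(String text) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        // Closing the GZIP stream writes the trailer, so read the bytes afterwards.
        try (GZIPOutputStream gz = new GZIPOutputStream(bytes)) {
            gz.write(text.getBytes(StandardCharsets.UTF_8));
        }
        return bytes.toByteArray();
    }

    static String gunzip(byte[] data) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(data))) {
            byte[] buffer = new byte[4096];
            int n;
            while ((n = gz.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }
}
```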

Can our object cache store both text and binary? Sure, why not. We can tag a cache region as supporting binary or text, and have appropriate plugins for various operations on each.
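Here is a sketch of the region-tagging idea. It assumes a hypothetical `PayloadCodec` plugin interface with two implementations: a text codec built on `java.util.Properties`, which already handles escaping, and a binary codec built on `DataOutputStream`. Each `CacheRegion` is bound to one codec, so callers never see the stored format. All class names here are illustrative.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical plugin: how one cache region turns objects into stored bytes.
interface PayloadCodec {
    String format();
    byte[] encode(Map<String, String> obj);
    Map<String, String> decode(byte[] payload);
}

// Text region: java.util.Properties escapes '=', ':' and other special characters.
final class TextCodec implements PayloadCodec {
    public String format() { return "text"; }

    public byte[] encode(Map<String, String> obj) {
        Properties props = new Properties();
        props.putAll(obj);
        StringWriter out = new StringWriter();
        try { props.store(out, null); } catch (IOException e) { throw new UncheckedIOException(e); }
        return out.toString().getBytes(StandardCharsets.UTF_8);
    }

    public Map<String, String> decode(byte[] payload) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(new String(payload, StandardCharsets.UTF_8)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Map<String, String> obj = new TreeMap<>();
        for (String name : props.stringPropertyNames()) obj.put(name, props.getProperty(name));
        return obj;
    }
}

// Binary region: a field count, then length-prefixed UTF strings.
final class BinaryCodec implements PayloadCodec {
    public String format() { return "binary"; }

    public byte[] encode(Map<String, String> obj) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(obj.size());
            for (Map.Entry<String, String> field : obj.entrySet()) {
                out.writeUTF(field.getKey());
                out.writeUTF(field.getValue());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    public Map<String, String> decode(byte[] payload) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(payload))) {
            int count = in.readInt();
            Map<String, String> obj = new TreeMap<>();
            for (int i = 0; i < count; i++) obj.put(in.readUTF(), in.readUTF());
            return obj;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

// A cache region is tagged with exactly one codec; callers only see maps.
final class CacheRegion {
    private final String name;
    private final PayloadCodec codec;
    private final Map<String, byte[]> store = new HashMap<>();

    CacheRegion(String name, PayloadCodec codec) { this.name = name; this.codec = codec; }

    String name() { return name; }
    String format() { return codec.format(); }
    void put(String key, Map<String, String> obj) { store.put(key, codec.encode(obj)); }

    Map<String, String> get(String key) {
        byte[] payload = store.get(key);
        return payload == null ? null : codec.decode(payload);
    }
}
```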

As always, comments are welcome.

©2006 Marc Adler - All Rights Reserved

Saturday, November 11, 2006

Tourist Warning : City Pride

While staying in Canary Wharf last week, a colleague from Microsoft and I went to the City Pride pub, which is one of the few pubs around the area, and close to the Hilton.

After sitting at a table for 15 minutes without being served, some kind soul told us that you actually have to go up to the bar to order your beers (Warning #1). We Americans like to be coddled, and demand waitress service.

Then I ordered a Black and Tan (Guinness and Bass, standard fare at any Irish pub in New York). The bartender had no idea what I was talking about (Warning #2).

To top it off, the bartender gave me the bill, and said that we could feel free to add a tip onto it ... which I did (a 10%, 2 Pound tip) (Warning #3 ... I was told the next day never to tip the bartender).

I guess this was my Lost In Translation moment that every tourist has when visiting a foreign country .... even though Bush treats Britain as our 51st state. (Yo Blair!)

©2006 Marc Adler - All Rights Reserved

Wanted : JMX to .Net Bridge

We need a way for a .Net GUI to speak JMX to a Java server. Anyone come up with anything yet?

Doing a Google search, it looks like we are not the only ones with that need.

Has anyone checked out the WS-JMX Connector?

©2006 Marc Adler - All Rights Reserved

Office 2007 Compatibility Pack is available

If you are like me, and you keep getting Office 2007 files sent to you by your local Microsoft reps while you are only running Office 2003, then you need this.

©2006 Marc Adler - All Rights Reserved

Wednesday, November 08, 2006

What does a Database Architect do?

Even though I was the very first developer on the Microsoft SQL Server Team, I have to admit that databases don't thrill me .... You have to have a special mindset to deal with databases all day, and to tell you the truth, my interests lie elsewhere. In fact, the sure way to get me to fail an interview is to ask me to write any moderately-complicated SQL query.

I firmly believe that, for major systems, the developers should not be allowed to design the data model, set up the databases, nor write the DDL. I have seen a number of instances in the past where systems that have had their database components designed by non-database experts have performed very poorly. Slow queries, no indexes, lock contention, etc. The best projects that I have been involved in have had a separate person just devoted to the database. If I am leading a major project, I will always have a dedicated db expert as part of the effort.

Let's say that we want to hire a database expert for our Architecture Team. What duties would they have?

1) Advise teams on best practices.

2) Come up with DDL coding standards.

3) Review existing systems and provide guidance on performance improvements.

4) Know the competitive landscape (Sybase vs SQL Server vs Oracle) and influence corporate standards.

5) Be expert at OLAP, MDX, Analysis Services, etc.

6) Know how to tune databases and hardware in order to provide optimal performance.

7) Advise all of the DBAs.

8) Monitor upcoming technologies, like Complex Event Processing, time-series databases, etc. Be familiar with KDB+, StreamBase, Vhayu, etc.

Database Architect is a full-time job, and I think that every Architecture group should have a slot for one.

Know anyone who wants to join us?

©2006 Marc Adler - All Rights Reserved

Monday, November 06, 2006

DrKW and Cross-Asset Trading

Dresdner folded its much-hyped Digital Markets division, which it liked to view as the "Bell Labs" of DrKW. All of the major players involved in the Digital Markets group have left or are in the process of leaving.

According to the DWT newsletter, the Digital Markets group had several charters:

1) Provide synergies across all lines-of-business at DrKW, and stop the siloing.

2) Provide a system for cross-asset class trading.

Eugene Grygo, the editor of the DWT newsletter, devoted his "Before the Spin" column to this news, and raised some questions with regard to the future of DrKW. In particular, what will happen to the dream of cross-asset class trading? Grygo mentions that HSBC is actively exploring this space, and I know a few other IBs doing the same. Is it impossible to coalesce the silos and provide true cross-asset class trading? If it is technically feasible, is it politically feasible?

In these times, when everyone is predicting a reduction in the number of traders due to automation, will cross-asset trading be the last field of battle as the silos struggle to maintain their autonomy?

I also wonder what becomes of DrKW's grid project. Maybe Matt or Deglan can illuminate us...

©2006 Marc Adler - All Rights Reserved

Sunday, November 05, 2006

Grid in Financial Markets

A presentation by JP Morgan on their use of Grid Computing.

Here is a page of PDFs from a February 2006 conference in Italy on Grid Computing in financial markets. There is even a paper on using grid for semantic analysis of financial news feeds. I need to get our London team to read some of this stuff.

There must be synergies between Complex Event Processing and Grids. Anyone looking at this space?

©2006 Marc Adler - All Rights Reserved

Friday, October 27, 2006

Mini-Guide to .Net/Java Interop

Here

Terry is sure to have this stuff in our Wiki before I get to London :-)

©2006 Marc Adler - All Rights Reserved

Pricing Turbo Warrants

Here

Today was the first time I have ever heard of Turbo Warrants.

A Turbo Warrant call is:

- a barrier knock-out option,

- paying a small rebate to the holder if the barrier is hit,

- with the barrier typically in-the-money.
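To make that definition concrete, here is a minimal Python sketch of a turbo call's payoff and a naive Monte Carlo price. It rests on several assumptions that are not from the linked paper: the rebate is a fixed amount, the barrier is checked only at each simulated step, the underlying follows geometric Brownian motion, and, for simplicity, the rebate is treated as paid at expiry. The function names `turbo_call_payoff` and `price_turbo_call` are hypothetical.

```python
import math
import random
from typing import Sequence


def turbo_call_payoff(path: Sequence[float], strike: float,
                      barrier: float, rebate: float) -> float:
    """Payoff of a turbo call along one discretely monitored price path."""
    # The barrier of a turbo call typically sits in-the-money: at or above the strike.
    if barrier < strike:
        raise ValueError("expected barrier >= strike for a turbo call")
    for price in path:
        if price <= barrier:
            # Knocked out: the holder receives only the (assumed fixed) rebate.
            return rebate
    # Never knocked out: behaves like a vanilla call at expiry.
    return max(path[-1] - strike, 0.0)


def price_turbo_call(spot: float, strike: float, barrier: float, rebate: float,
                     rate: float, vol: float, maturity: float,
                     steps: int = 100, n_paths: int = 10_000,
                     seed: int = 42) -> float:
    """Monte Carlo price under geometric Brownian motion (an assumption).

    Simplification: the rebate is treated as paid at expiry, so every
    payoff is discounted over the full maturity.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    dt = maturity / steps
    drift = (rate - 0.5 * vol * vol) * dt
    diffusion = vol * math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        s = spot
        path = [s]
        for _ in range(steps):
            # One GBM step under the risk-neutral measure.
            s *= math.exp(drift + diffusion * rng.gauss(0.0, 1.0))
            path.append(s)
        total += turbo_call_payoff(path, strike, barrier, rebate)
    return math.exp(-rate * maturity) * total / n_paths
```

For example, `price_turbo_call(100.0, 80.0, 90.0, 0.5, 0.03, 0.25, 1.0)` prices a one-year turbo call struck at 80 with its knock-out barrier at 90. Checking the barrier only at the simulated steps misses crossings that happen between steps, so this sketch somewhat underestimates how often a continuously monitored barrier would be hit.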

©2006 Marc Adler - All Rights Reserved

Thursday, October 26, 2006

The Bile Blog

The Bile Blog is even more cynical and caustic than I am.

Thanks to the ThoughtWorkers at my office for pointing this out. Especially funny are the posts that harpoon the Fowlbots. And, I think that he has even biled yours truly.

This blog is going to take me quite a while to go through ... would be great if Virgin Atlantic had internet connectivity ... I think reading this blog would occupy my entire flight.

By the way ... kudos to our own Fowlbots ... James, Chris, Dave and Alistair. Job well done, boys!

©2006 Marc Adler - All Rights Reserved

C++/CLI Opposition

C++/CLI == C++ Divided By CLI

Here

Interesting reading, especially in light of the fact that investment banks have a ton of old, non-MFC Visual C++ 6 code. The choice is to move to C++/CLI or to start fresh with C#.

©2006 Marc Adler - All Rights Reserved

Wednesday, October 25, 2006

I am scared of The Wharf

Earlier this year, my former consulting company closed its London office without warning. In a mailing to the staff, the partners said that there was just no business to be had for very smart .Net and Java consultants in Canary Wharf.

I am imagining what kind of place this Wharf could be. When I get there, will I see a lone seaman in a yellow raincoat yelling "Ahoy, Matey" to me from a distant pier? Are there thugs and hooligans behind every dark corner, waiting to roll me for my wallet? Will I see rows of cars, inhabited by hormonal teenagers, "watching the submarine races" (a popular saying in the 1950s)?

I am very afraid of this Wharf place. Perhaps JohnOS, Matt, Deglan and Pin can organize a bile-night to keep me off the streets? Perhaps Rut the Nut is reading this blog and will get some of our old Citicorp/EBS gang together for a slosh-up?

©2006 Marc Adler - All Rights Reserved

Tuesday, October 24, 2006

Funny Code

What is the funniest line of code you have ever seen? One of the developers at Barcap wrote the following line in a C# function:

if (this == null)
{
}

It absolutely cracked me up (well... I guess you had to be there).

©2006 Marc Adler - All Rights Reserved

London Bound

I will be in London all next week, checking out the happenings in Canary Wharf. I have not been to London for a long time, so it will be interesting to see how built up the Wharf is. More interesting will be to see if they built any quality pubs. Last time I was in London, all of the pubs closed at 11 at night.

One nice thing will be to test out Virgin Atlantic's Business Class. I have been hearing tales of free massages .... hmmm ...

©2006 Marc Adler - All Rights Reserved

Sunday, October 22, 2006

Separated at Birth

Chris and I came from the same company, and we were both equally dissatisfied with the kind of body shop work that we were doing at our last client. We both struck out simultaneously to find a place where we could do meaningful work. We landed in very similar positions at two of the largest investment banks in the world.

Funnier still is that we share almost the same technology stack, from front to back. I am sure that right after the conference call that we have with our market data infrastructure vendor, Chris is on the same call an hour later.

Craig mentions that there are only about 200 market data specialists in the whole world. Everyone probably knows what everyone else is doing. The same thing goes on in Wall Street, and by extension, the City. The after-bonus shuffle will take place in another 4 months, and it's an opportunity for every company to find out what every other company is doing.

When you come down to it, we all have very similar technology stacks. We all know which vendors are out there, and we have all done the same kinds of performance and stress comparisons between similar vendors. The goal is to squeeze out that one extra millisecond of performance so that your order is hit before your competitor's order. It could come down to one extra 'lock' in a piece of code. It will be a race to recruit the Joe Duffys and Rico Marianis of the world. All of the IBs will need to recognize the need for these kinds of people, and adapt themselves so that these people will not feel stifled within a Wall Street environment.

©2006 Marc Adler - All Rights Reserved
